Nvidia has unveiled its groundbreaking AI audio model, “Fugatto,” which pushes the boundaries of sound creation by synthesizing audio elements that have never existed. Unlike traditional AI models that generate speech or music from text prompts, Fugatto transforms a mix of sounds, voices, and music into entirely new audio experiences using advanced synthetic training methods.

A Glimpse into Fugatto’s Capabilities

While Fugatto isn’t available for public use yet, Nvidia’s sample showcase highlights its ability to manipulate distinct audio traits. From underwater voices to saxophones that “bark” and ambulance sirens singing in harmony, Fugatto is described as “a Swiss Army knife for sound.” However, results can vary in quality, reflecting the model’s innovative but experimental nature.

The Science Behind the Sound

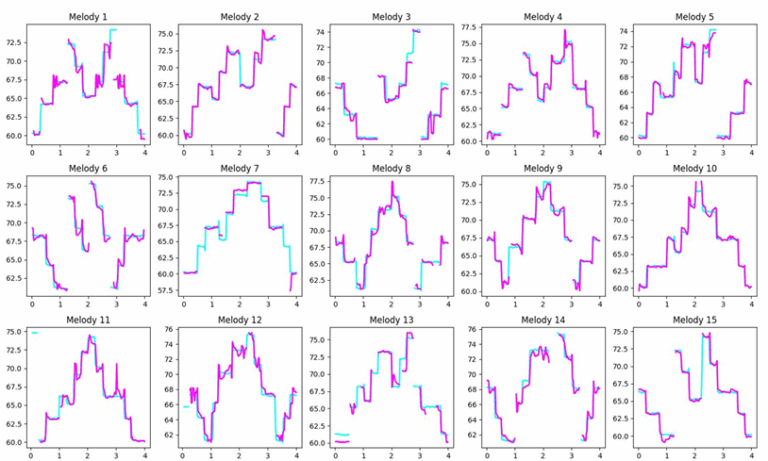

Nvidia researchers detailed the challenges of creating a training dataset that bridges audio and language. To achieve this, they used large language models (LLMs) to generate Python scripts that define “audio personas” and applied prompts to quantify traits like emotion, gender, and pitch. The process resulted in a massive dataset of 20 million annotated samples, representing over 50,000 hours of audio, or roughly nine seconds per sample on average. This data enabled the development of a model with 2.5 billion parameters, trained on Nvidia Tensor Core GPUs, which is reported to score consistently on audio quality tests even though individual outputs still vary.
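To make the idea of script-generated training instructions more concrete, here is a minimal sketch of a template-based generator. It is not Nvidia's script: the persona list, the emotion list, the template wording, and the function name `build_instructions` are all hypothetical, chosen only to show how a short script can expand a few traits into many text instructions.

```python
import itertools

# Hypothetical vocabularies, invented for illustration only.
PERSONAS = ["a calm narrator", "an excited announcer", "a sleepy child"]
EMOTIONS = ["happy", "sad", "angry"]
PITCHES = ["low", "high"]

# Hypothetical template; a real pipeline would use many templates.
TEMPLATE = "Synthesize {persona} speaking in a {emotion} voice with a {pitch} pitch."


def build_instructions(personas, emotions, pitches):
    """Return one instruction per (persona, emotion, pitch) combination."""
    return [
        TEMPLATE.format(persona=persona, emotion=emotion, pitch=pitch)
        for persona, emotion, pitch in itertools.product(personas, emotions, pitches)
    ]


instructions = build_instructions(PERSONAS, EMOTIONS, PITCHES)
print(len(instructions))  # 3 personas x 3 emotions x 2 pitches = 18
print(instructions[0])
```

Even with these tiny lists the script yields 18 distinct instructions, which hints at how quickly template expansion can scale toward a dataset of millions of annotated samples.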

ComposableART: A New Frontier in Audio Customization

Fugatto’s standout feature is its “ComposableART” system, which allows for the seamless blending of audio traits. It can generate unique outputs, such as a violin mimicking a baby’s laughter or factory machinery screaming in metallic tones. The ability to adjust individual traits on a continuum offers unprecedented control, from tuning accents to modifying emotional intensity in spoken text.
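One way to picture adjusting traits “on a continuum” is as a weighted blend of directions away from a neutral output. The sketch below is an assumption made for illustration, not Nvidia's published ComposableART method: the three-number vectors stand in for whatever internal representation the model uses, and `blend_traits`, the trait names, and the weights are hypothetical.

```python
def blend_traits(baseline, trait_outputs, weights):
    """Combine traits as baseline + sum_k w_k * (trait_k - baseline).

    A weight of 0 ignores a trait, 1 applies it fully, and values in
    between move smoothly along the continuum.
    """
    blended = list(baseline)
    for name, output in trait_outputs.items():
        weight = weights.get(name, 0.0)
        for i, (base_value, trait_value) in enumerate(zip(baseline, output)):
            blended[i] += weight * (trait_value - base_value)
    return blended


# Toy stand-ins for model outputs (hypothetical values).
baseline = [0.0, 0.0, 0.0]
trait_outputs = {
    "violin": [1.0, 0.0, 2.0],
    "laughter": [0.0, 4.0, 2.0],
}

mix = blend_traits(baseline, trait_outputs, {"violin": 0.5, "laughter": 0.25})
print(mix)  # [0.5, 1.0, 1.5]
```

Raising the `laughter` weight toward 1.0 would pull the result further toward that trait without changing how much `violin` contributes, which is the kind of independent control the article describes.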

Transforming Creative and Practical Applications

Beyond artistic audio synthesis, Fugatto performs tasks like isolating vocals, adjusting emotional tones in speech, and enhancing music with rhythmic effects. Nvidia envisions applications in music prototyping, adaptive video game scores, and personalized advertising. However, the model is framed as a tool to complement human creativity rather than replace it.

As producer Ido Zmishlany puts it, “AI is writing the next chapter of music. It’s a new instrument, a new tool—and that’s super exciting.” With Fugatto, Nvidia is redefining the possibilities of sound and music in the digital age.

Introduction to NVIDIA’s AI Audio Model

NVIDIA has recently made headlines with the introduction of its groundbreaking AI audio model, a significant advancement in the field of audio synthesis. This innovative technology leverages the power of artificial intelligence to create rich, high-quality soundscapes that were once confined to the realms of imagination. The motivation behind developing this model stems from a growing demand for sophisticated audio solutions that can enhance various creative industries, including gaming, film, and music production.

The emergence of this AI audio technology reflects NVIDIA’s commitment to pushing the boundaries of what is possible in both artificial intelligence and audio engineering. By utilizing deep learning algorithms, the model is capable of generating audio that accurately mimics complex sound environments, offering creators a new toolkit to augment their artistic expression. This advancement addresses previously existing limitations in sound synthesis that often restricted creativity and innovation in audio production.

As the realm of digital content continues to evolve, the integration of AI into audio engineering is becoming increasingly significant. NVIDIA’s new AI audio model exemplifies this trend, paving the way for new generations of audio creatives to explore uncharted territories. It sets the stage for further exploration into sound synthesis, providing a versatile solution for both professionals and enthusiasts alike. The innovations within this model signal a transformative shift in how sound can be crafted, enabling users to produce original audio content with remarkable precision and depth.

In the subsequent sections of this blog post, we will delve deeper into the capabilities of NVIDIA’s AI audio model, its applications across different industries, and the potential it holds for the future of audio creativity. With NVIDIA leading the charge in harnessing AI for audio, the possibilities appear boundless.

Mechanics of Sound Synthesis

NVIDIA’s AI audio model employs advanced algorithms and neural networks for sound synthesis, enabling the creation of audio that would be difficult to produce with conventional tools. At the core of the model lies deep learning, a subset of machine learning that uses vast datasets to train artificial neural networks. These networks are loosely inspired by the brain’s neurons, and with enough training data they learn to recognize and generate complex audio patterns.

The training phase involves feeding the model a broad spectrum of audio data, including different sound types, timbres, and frequencies. This diverse data set enables the AI to learn the relationships between various audio features, allowing it to synthesize new sounds. Unlike traditional sound modeling techniques, which often rely on pre-recorded samples and physical modeling of instruments, NVIDIA’s approach emphasizes creativity and originality. The AI creates audio signals by generating novel combinations of sound elements that have never been heard before.

One widely used family of techniques in AI audio synthesis is the generative adversarial network (GAN), which consists of two parts: a generator and a discriminator. The generator creates new audio samples, while the discriminator judges whether they sound authentic. This adversarial relationship drives both components to improve, which can raise synthesis quality over the course of training. Nvidia describes Fugatto itself as a generative transformer model (the name stands for Foundational Generative Audio Transformer Opus 1), so GANs are best read here as background on how generative audio can work rather than as a description of Fugatto’s internals. Either way, generative approaches let artists and designers explore soundscapes that would be impractical to build by hand.
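To make the generator and discriminator relationship concrete, here is a minimal sketch of the standard GAN loss functions in plain Python. It illustrates the general technique only and is not Nvidia's training code; the function names and the example probabilities are hypothetical.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Loss for the discriminator.

    d_real: probability the discriminator assigns to a real sample being real.
    d_fake: probability it assigns to a generated sample being real.
    Low when real samples score near 1 and generated samples near 0.
    """
    return -(math.log(d_real) + math.log(1.0 - d_fake))


def generator_loss(d_fake):
    """Non-saturating generator loss: low when the discriminator is fooled."""
    return -math.log(d_fake)


# A confident, correct discriminator versus one that is only guessing.
print(discriminator_loss(0.9, 0.1) < discriminator_loss(0.5, 0.5))  # True

# The generator does better when its output is rated as more likely real.
print(generator_loss(0.9) < generator_loss(0.1))  # True
```

The two losses pull in opposite directions on `d_fake`, which is the adversarial pressure the paragraph above refers to.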

Moreover, audio models of this kind build on signal processing and time-frequency analysis, which describe a sound by how its frequency content changes over time. Working with that representation lets a model examine and reshape characteristics such as pitch, timbre, and texture, which supports rich, dynamic soundscapes. This combination of classic signal processing and learned generation marks a significant advance in how sound can be understood and created.
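As a rough illustration of time-frequency analysis, the sketch below computes a small spectrogram by applying a windowed discrete Fourier transform to overlapping frames of a test tone. It uses only the Python standard library and is a teaching example, not part of any Nvidia tool; the sample rate, frame size, and tone frequency are arbitrary values chosen for the demonstration.

```python
import cmath
import math

SAMPLE_RATE = 8000  # samples per second (illustrative)
FRAME_SIZE = 256    # samples per analysis frame
TONE_HZ = 1000.0    # frequency of the test tone


def hann(n, size):
    """Hann window value for sample n of a frame with the given size."""
    return 0.5 - 0.5 * math.cos(2.0 * math.pi * n / size)


def frame_magnitudes(frame):
    """Magnitude spectrum of one windowed frame via a naive DFT."""
    size = len(frame)
    windowed = [x * hann(n, size) for n, x in enumerate(frame)]
    magnitudes = []
    for k in range(size // 2 + 1):
        acc = sum(
            x * cmath.exp(-2j * math.pi * k * n / size)
            for n, x in enumerate(windowed)
        )
        magnitudes.append(abs(acc))
    return magnitudes


# Generate a pure sine tone, then analyze it frame by frame with 50% overlap.
signal = [
    math.sin(2.0 * math.pi * TONE_HZ * n / SAMPLE_RATE)
    for n in range(FRAME_SIZE * 4)
]
hop = FRAME_SIZE // 2
spectrogram = [
    frame_magnitudes(signal[start:start + FRAME_SIZE])
    for start in range(0, len(signal) - FRAME_SIZE + 1, hop)
]

# Convert the strongest bin in each frame back to a frequency in Hz.
peak_hz = [
    max(range(len(mags)), key=mags.__getitem__) * SAMPLE_RATE / FRAME_SIZE
    for mags in spectrogram
]
print(peak_hz)
```

Each row of `spectrogram` is one moment in time and each column is one frequency band, so the strongest band in every frame lands on the 1000 Hz test tone. Production systems would use an FFT library instead of this naive loop, which is written for readability rather than speed.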

Applications and Implications

NVIDIA’s AI audio model has potential applications across multiple industries and could change how sound is produced and designed. In gaming, for instance, the ability to generate realistic audio environments can significantly enhance the player experience. Game developers could craft immersive soundscapes that adapt dynamically to player actions, making each gameplay session unique. This provides a richer auditory experience and deepens player engagement, as sound becomes an integral part of the storytelling process.

In filmmaking, the versatility of NVIDIA’s AI audio model allows for the creation of nuanced soundtracks and realistic sound effects. Filmmakers can generate a variety of audio elements that align closely with visuals, thereby fostering deeper emotional connections with audiences. This technology might also streamline the post-production process by reducing reliance on extensive sound libraries and enabling more precise matching of sounds to scenes.

The music production industry stands to gain immensely from this AI audio capability as well. Musicians and producers can experiment with sound in unprecedented ways, creating compositions that extend beyond traditional boundaries. The ability to synthesize unique audio outputs and manipulate sounds with AI opens avenues for innovative musical expressions that were previously limited by technological constraints.

Additionally, this AI audio technology has significant implications for accessibility in sound design. By democratizing the process, individuals without extensive technical backgrounds can now experiment with audio creation, empowering a diverse range of creators. This not only fosters inclusivity but also encourages a broader spectrum of artistic expression. As these tools become more accessible, they are likely to catalyze a new wave of creativity, bridging gaps between professional sound designers and aspiring artists around the world.

Future of AI in Audio Creation

The future of artificial intelligence in audio creation stands at a fascinating crossroads, shaped significantly by innovations in technology such as NVIDIA’s AI audio model. As this field continues to evolve, we can anticipate a range of developments aimed at enhancing the quality and efficiency of sound synthesis. One of the most promising advancements involves improving the accuracy with which AI can interpret human emotion and context. This capability could allow AI systems to create sounds that resonate deeply with listeners, offering a more immersive audio experience than ever before.

The potential applications of NVIDIA’s technology in diverse domains, including music production, games, and film, are significant. These advancements allow creators to push the boundaries of sound design and offer tools that can craft complex soundscapes from simple descriptions. Moreover, continued progress in machine learning is expected to make these models more effective, enabling them to produce high-fidelity sound that blends seamlessly into artistic works.

As AI continues to forge its path in audio, ethical considerations will also come to the forefront. Issues surrounding copyright, authorship, and the implications of AI-generated content must be addressed, ensuring that industry stakeholders navigate these challenges responsibly. NVIDIA, with its commitment to ethical AI practices, is likely to play a key role in establishing guidelines and frameworks that promote the responsible use of AI technologies in creative industries.

Ultimately, as research areas expand, we can expect to see even more sophisticated applications of AI in audio spaces. The convergence of creativity and technology not only promises to empower artists but also sparks critical discussions about the future landscape of sound creation. As innovations from companies like NVIDIA continue to unfold, it is essential to maintain a focus on ethical dimensions and societal impacts while exploring the limitless possibilities of AI audio.