In a world increasingly defined by digital connectivity and intelligent automation, the convergence of Artificial Intelligence (AI) and the Internet of Things (IoT) stands as a transformative force. These two technological powerhouses are shaping environments that can adapt, learn, and respond to human needs in real time. From personalized healthcare and smart city infrastructure to autonomous vehicles and industrial automation, AI-powered IoT ecosystems promise unprecedented convenience, efficiency, and economic growth.

However, this integration also introduces a new array of ethical and security challenges. As devices collect, analyze, and act upon massive amounts of data, concerns about privacy, transparency, algorithmic fairness, cyber threats, and regulatory compliance come to the forefront. Stakeholders—ranging from developers and enterprises to policymakers and consumers—must navigate these complex issues thoughtfully. By understanding and addressing these challenges, we can ensure that the benefits of AI-powered IoT ecosystems do not come at the cost of compromised ethics, trust, or personal security.

1. Understanding AI-Powered IoT Ecosystems

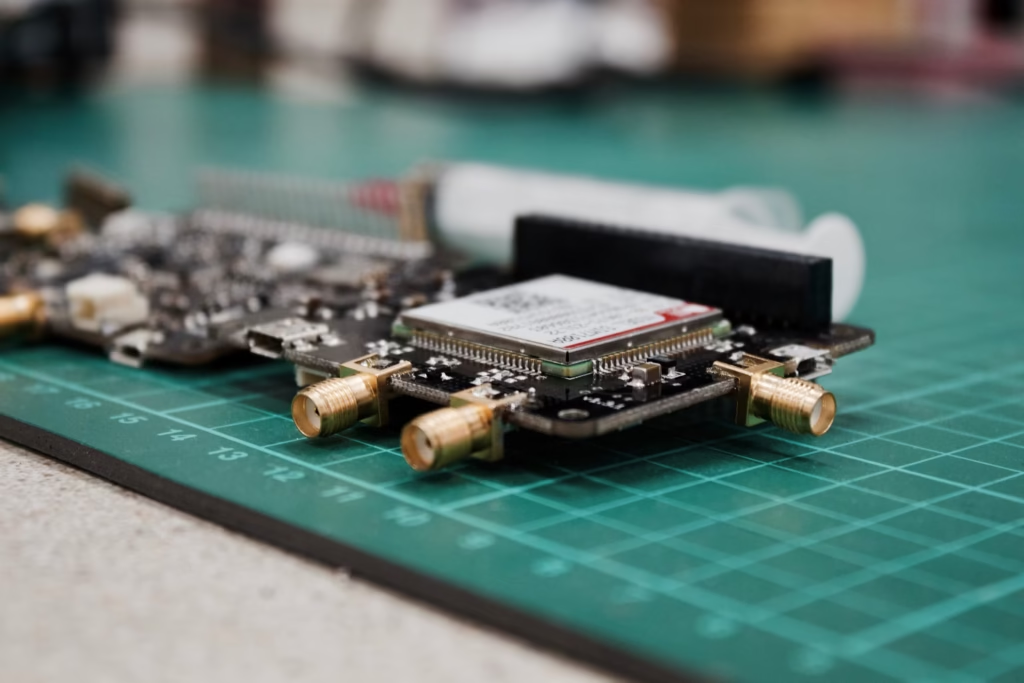

AI-powered IoT ecosystems involve an intricate interplay of sensors, connected devices, data analytics, and machine learning models. IoT devices gather real-time data—such as temperature, location, usage patterns, and biometrics—from the environment. AI systems then process this data, discovering patterns, making predictions, and automating decisions. This synergy enables a level of responsiveness and functionality unimaginable just a decade ago.
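The sense, analyze, act cycle described above can be sketched in a few lines of Python. This is a toy illustration under explicit assumptions: a single hypothetical temperature sensor, a moving-average "model" standing in for a trained machine learning model, and a hypothetical heating/cooling actuator. Names such as `Reading`, `predict_next`, and `decide` are illustrative only.

```python
from dataclasses import dataclass
from statistics import mean


@dataclass
class Reading:
    """One sample from a hypothetical temperature sensor."""
    device_id: str
    celsius: float


def predict_next(history: list[float], window: int = 3) -> float:
    """Toy 'model': forecast the next value as the mean of the last `window` samples."""
    return mean(history[-window:])


def decide(forecast: float, target: float = 21.0, band: float = 0.5) -> str:
    """Map a forecast to an action for a hypothetical HVAC actuator."""
    if forecast < target - band:
        return "heat"
    if forecast > target + band:
        return "cool"
    return "idle"


def control_loop(readings: list[Reading]) -> list[str]:
    """Sense, analyze, act: one decision per reading, using the history seen so far."""
    history: list[float] = []
    actions: list[str] = []
    for r in readings:
        history.append(r.celsius)         # sense
        forecast = predict_next(history)  # analyze
        actions.append(decide(forecast))  # act
    return actions


if __name__ == "__main__":
    sample = [Reading("thermo-1", c) for c in [19.0, 19.5, 20.0, 22.0, 23.0, 23.5]]
    print(control_loop(sample))  # ['heat', 'heat', 'heat', 'idle', 'cool', 'cool']
```

In a real deployment the `predict_next` step would be a learned model and the loop would run continuously on streaming data, but the division of labor between sensing, inference, and actuation stays the same.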

Smart home devices that learn occupants’ schedules to optimize energy usage, manufacturing robots that predict machine downtime before it occurs, and traffic management systems that alleviate congestion by analyzing real-time conditions—all exemplify how AI and IoT working in tandem can streamline workflows. These technologies ultimately have the potential to improve living standards, boost productivity, and fuel innovation across sectors.

Yet as these systems advance, the complexities multiply. Greater autonomy in decision-making, a rise in data-sharing networks, and the spread of interconnected devices raise pivotal questions: How do we safeguard user privacy when devices are always “listening” or “sensing”? How do we ensure algorithms making critical decisions—like medical diagnoses or mortgage approvals—are fair and unbiased? And how can we defend these vast networks against cyber threats?

2. Ethical Considerations in AI-Powered IoT Ecosystems

2.1 Data Privacy and Informed Consent

At the heart of the ethical debate lies the issue of data privacy. AI-powered IoT ecosystems thrive on data—collected continuously, often from highly personal sources. Smart fitness trackers monitor health metrics, voice assistants record conversations, and connected cameras watch over our homes. While these devices promise convenience and personalized services, the potential for misuse or unauthorized access to this data cannot be ignored.

Privacy hinges on transparent data practices and informed consent. Users should understand what data is collected, how it’s used, who has access to it, and for what purposes. Achieving true informed consent can be challenging when devices are complex and data handling policies are lengthy or opaque. Clear communication, simplified privacy notices, and explicit user opt-in mechanisms are crucial to maintaining trust. Moreover, anonymization and data minimization practices can help protect individual identities while still enabling AI-driven insights.
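As a rough illustration of data minimization, the sketch below keeps only an allow-list of fields and replaces the user identifier with a keyed hash (HMAC-SHA256 from Python's standard library). It assumes a hypothetical fitness-tracker record and hypothetical names (`ALLOWED_FIELDS`, `minimize`). Keyed hashing is pseudonymization rather than true anonymization: anyone holding the key can re-link records, so the key must be stored separately from the data.

```python
import hashlib
import hmac

# Hypothetical set of fields the analytics service actually needs; all others are dropped.
ALLOWED_FIELDS = {"steps", "heart_rate", "timestamp"}


def pseudonymize(user_id: str, key: bytes) -> str:
    """Replace a direct identifier with a keyed hash (HMAC-SHA256).

    This is pseudonymization, not anonymization: whoever holds `key`
    can recompute the mapping and re-link records.
    """
    return hmac.new(key, user_id.encode("utf-8"), hashlib.sha256).hexdigest()


def minimize(record: dict, key: bytes) -> dict:
    """Keep only allow-listed fields and swap the user ID for a pseudonym."""
    reduced = {k: v for k, v in record.items() if k in ALLOWED_FIELDS}
    reduced["subject"] = pseudonymize(record["user_id"], key)
    return reduced


if __name__ == "__main__":
    raw = {
        "user_id": "alice@example.com",
        "name": "Alice",
        "gps": (52.1, 4.3),
        "steps": 8432,
        "heart_rate": 71,
        "timestamp": "2024-05-01T08:00:00Z",
    }
    # Name and GPS position never leave the device-side pipeline.
    print(minimize(raw, key=b"demo-key-keep-in-a-secrets-store"))
```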

2.2 Algorithmic Bias and Fairness

As machine learning models interpret data to make decisions, they may inadvertently perpetuate biases present in their training data. When the data gathered by IoT devices under-represents certain demographics or environments, models trained on it can produce skewed outcomes. For instance, smart home security systems that rely on facial recognition might be less accurate for certain ethnic groups, and predictive maintenance algorithms in factories might be tuned for efficiency in ways that overlook worker safety.

To address these concerns, developers and data scientists must actively seek to identify and correct biases. Techniques such as fairness metrics, bias detection tools, and diverse training datasets can help ensure AI models reflect equitable standards. Regular audits and external peer reviews can also maintain accountability, fostering an environment where fairness becomes a core design principle rather than an afterthought.
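Two commonly used fairness checks are the gap in positive-prediction (selection) rates between groups, often called demographic parity difference, and the spread of accuracy across groups. The plain-Python sketch below computes both. The toy labels and group names are made up for illustration, and dedicated fairness toolkits offer many more metrics.

```python
from collections import defaultdict


def _by_group(values, groups):
    """Collect values into one list per group label."""
    buckets = defaultdict(list)
    for value, group in zip(values, groups):
        buckets[group].append(value)
    return buckets


def selection_rates(y_pred, groups):
    """Share of positive (1) predictions within each group."""
    return {g: sum(v) / len(v) for g, v in _by_group(y_pred, groups).items()}


def group_accuracy(y_true, y_pred, groups):
    """Accuracy within each group; a wide spread signals uneven performance."""
    hits = [int(t == p) for t, p in zip(y_true, y_pred)]
    return {g: sum(v) / len(v) for g, v in _by_group(hits, groups).items()}


def demographic_parity_difference(y_pred, groups):
    """Largest minus smallest selection rate across groups (0.0 means parity)."""
    rates = list(selection_rates(y_pred, groups).values())
    return max(rates) - min(rates)


if __name__ == "__main__":
    y_true = [1, 0, 1, 1, 0, 1, 0, 0]
    y_pred = [1, 0, 1, 1, 0, 0, 0, 1]
    groups = ["a", "a", "a", "a", "b", "b", "b", "b"]
    print(selection_rates(y_pred, groups))                # {'a': 0.75, 'b': 0.25}
    print(group_accuracy(y_true, y_pred, groups))         # {'a': 1.0, 'b': 0.5}
    print(demographic_parity_difference(y_pred, groups))  # 0.5
```

Which metric matters depends on the application; parity of selection rates and parity of error rates can conflict, so audits usually report several side by side.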

2.3 Transparency and Explainability

In a complex AI-driven IoT environment, users and regulators often demand a clear understanding of how decisions are made. Explainability involves shedding light on the logic behind AI conclusions, enabling stakeholders to trust that outcomes are not arbitrary. Whether it’s a smart thermostat adjusting home temperatures or an AI-driven health assistant diagnosing a condition, explainability fosters trust and allows stakeholders to challenge or correct errors.

Explainable AI (XAI) techniques offer ways to provide interpretable results. Visualizing decision pathways, using simpler, more transparent models where possible, and employing post-hoc explanation tools can help demystify AI processes. As regulations increasingly stress the importance of transparency—particularly in critical domains like finance, healthcare, and governance—embracing explainability is not just ethical but strategically sound.
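For the "simpler, more transparent models" route, a linear model is a convenient example: each feature's contribution is its weight times its value, and the intercept plus the contributions add up to the score. The weights and feature names below (`WEIGHTS`, `outdoor_temp_c`, and so on) are hypothetical, chosen only to show how an explanation can be produced alongside each prediction.

```python
# Hypothetical weights for a transparent linear score; feature names are illustrative.
WEIGHTS = {"outdoor_temp_c": -0.4, "occupancy": 1.5, "hour_of_day": 0.05}
INTERCEPT = 2.0


def score_with_explanation(features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return the score plus each feature's contribution (weight * value).

    Because the model is linear, the intercept plus the contributions equals
    the score, so the explanation describes exactly what the model computed.
    """
    contributions = {name: w * features[name] for name, w in WEIGHTS.items()}
    score = INTERCEPT + sum(contributions.values())
    # Rank by absolute impact so the most influential factors come first.
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


if __name__ == "__main__":
    score, reasons = score_with_explanation(
        {"outdoor_temp_c": 10.0, "occupancy": 1.0, "hour_of_day": 18.0}
    )
    print(f"score={score:.2f}")
    for name, contribution in reasons:
        print(f"  {name}: {contribution:+.2f}")
```

For non-linear models, post-hoc tools approximate this kind of per-feature breakdown; with a linear model the breakdown is exact by construction, which is one reason simpler models are preferred where they perform adequately.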

2.4 Labor Market Disruption and Human-Centric Design

The automation of tasks once performed by humans raises concerns about job displacement and the future of work. While AI-powered IoT ecosystems can create new jobs—like data science roles or IoT infrastructure management—they may also render certain positions obsolete. Ethically, stakeholders should prioritize human-centric design, ensuring technology complements human capabilities rather than solely replacing them.

Strategies like upskilling and reskilling programs, universal basic income, and policies that encourage collaborative, human-in-the-loop AI systems can help mitigate the negative societal impact of automation. Aligning AI and IoT development with human values ensures that these technologies serve as tools to enhance, rather than diminish, human livelihoods and well-being.

3. Security Challenges in a Connected World

As IoT devices become ubiquitous, they broaden the digital attack surface, making the security of AI-powered ecosystems a pressing concern. From household appliances to critical infrastructure, vulnerabilities can lead to data breaches, operational shutdowns, or the manipulation of sensitive systems.

3.1 Cybersecurity Vulnerabilities and Device-Level Threats

IoT devices often suffer from minimal built-in security. Many operate with default passwords, outdated firmware, or weak encryption protocols. Compromised cameras, smart locks, or connected medical devices can open backdoors for cybercriminals. Once attackers gain access, they can move laterally through networks, exfiltrating data or holding systems hostage.

Adopting a security-by-design mindset is paramount. Manufacturers should implement secure boot processes, robust encryption, and regular firmware updates. Adhering to recognized standards and guidance, such as ETSI EN 303 645 or NIST's IoT device cybersecurity guidance (the NISTIR 8259 series), can help make devices more resilient against attacks. Meanwhile, multi-factor authentication and passwordless solutions can harden user access.
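One concrete security-by-design measure, in the spirit of the "no universal default passwords" provision in ETSI EN 303 645, is to refuse factory defaults during setup and store only a salted hash of the user's password. The sketch below is a minimal Python illustration under stated assumptions: the default list, the 12-character minimum, and the PBKDF2 iteration count are illustrative values chosen for this example, not requirements quoted from any standard.

```python
import hashlib
import hmac
import secrets

# Illustrative policy values for this sketch, not quoted from any standard.
KNOWN_DEFAULTS = {"admin", "password", "12345678", "default"}
MIN_LENGTH = 12
ITERATIONS = 600_000  # PBKDF2 work factor; tune for the target hardware


def set_device_password(new_password: str) -> tuple[bytes, bytes]:
    """Validate a user-chosen password and return (salt, derived_key) to store.

    Known factory defaults and short passwords are rejected; only a salted
    PBKDF2-HMAC-SHA256 hash is kept, never the plaintext.
    """
    if new_password.lower() in KNOWN_DEFAULTS:
        raise ValueError("factory-default password is not allowed")
    if len(new_password) < MIN_LENGTH:
        raise ValueError(f"password must be at least {MIN_LENGTH} characters")
    salt = secrets.token_bytes(16)
    key = hashlib.pbkdf2_hmac("sha256", new_password.encode("utf-8"), salt, ITERATIONS)
    return salt, key


def check_password(attempt: str, salt: bytes, stored_key: bytes) -> bool:
    """Re-derive the key from the attempt and compare in constant time."""
    candidate = hashlib.pbkdf2_hmac("sha256", attempt.encode("utf-8"), salt, ITERATIONS)
    return hmac.compare_digest(candidate, stored_key)


if __name__ == "__main__":
    try:
        set_device_password("admin")
    except ValueError as err:
        print("rejected:", err)
    salt, key = set_device_password("correct horse battery")
    print(check_password("correct horse battery", salt, key))
```

Constrained devices may need a lighter key-derivation setting or may delegate credential checks to a companion app or cloud service; the design point is that no device ships, or keeps running, with a shared default credential.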

3.2 Data Integrity and Ransomware Threats

AI-powered IoT systems rely heavily on data integrity. Tampering with sensor outputs or input data can produce incorrect predictions and harmful actions. A smart energy grid might distribute power sub-optimally if fed manipulated data, or autonomous vehicles could be misled into dangerous maneuvers by falsified signals.
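One standard defense against tampering in transit is to authenticate each reading with a message authentication code. The sketch below uses HMAC-SHA256 from Python's standard library over a canonical JSON encoding. It assumes the sensor and the consumer share a secret key that was provisioned securely, and the field names (`sensor`, `seq`, `kw`) are hypothetical. An HMAC detects modification in transit but cannot tell whether a compromised sensor is itself reporting false values, which is where validation and anomaly detection come in.

```python
import hashlib
import hmac
import json


def _canonical(reading: dict) -> bytes:
    """Serialize deterministically so sender and receiver hash identical bytes."""
    return json.dumps(reading, sort_keys=True, separators=(",", ":")).encode("utf-8")


def sign_reading(reading: dict, key: bytes) -> dict:
    """Attach an HMAC-SHA256 tag computed over the canonical encoding."""
    tag = hmac.new(key, _canonical(reading), hashlib.sha256).hexdigest()
    return {"reading": reading, "tag": tag}


def verify_reading(message: dict, key: bytes) -> bool:
    """Recompute the tag and compare in constant time; False means reject the data."""
    expected = hmac.new(key, _canonical(message["reading"]), hashlib.sha256).hexdigest()
    return hmac.compare_digest(expected, message["tag"])


if __name__ == "__main__":
    key = b"shared-secret-provisioned-at-install"
    msg = sign_reading({"sensor": "grid-7", "seq": 1, "kw": 3.2}, key)
    print(verify_reading(msg, key))  # True
    forged = {"reading": dict(msg["reading"], kw=9.9), "tag": msg["tag"]}
    print(verify_reading(forged, key))  # False
```

Including a sequence number (`seq`) in the signed payload lets the receiver also reject replayed messages, although that replay check is not implemented here.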

Ransomware attacks, in which threat actors encrypt critical data or lock systems to demand payment, pose a severe risk to IoT-driven environments. Strict data validation and anomaly detection can flag tampered inputs and unusual device or network behavior early, while secure data storage limits what attackers can reach. Routine offline or immutable backups, network segmentation, and zero-trust architecture further reduce the potential fallout of a compromise.
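The anomaly detection mentioned above can start with something as simple as a robust outlier rule. The sketch below flags readings whose modified z-score, computed from the median and the median absolute deviation (MAD), exceeds 3.5, a commonly cited cutoff. The threshold and the sample readings are assumptions for illustration; real deployments usually combine several detectors with domain-specific limits.

```python
from statistics import median


def robust_outliers(values: list[float], threshold: float = 3.5) -> list[int]:
    """Return indices whose modified z-score exceeds `threshold`.

    The median and MAD are far less affected by a few extreme or injected
    readings than the mean and standard deviation would be.
    """
    med = median(values)
    mad = median(abs(v - med) for v in values)
    if mad == 0:
        # Most values identical: this simple rule cannot scale deviations,
        # so the sketch reports nothing rather than dividing by zero.
        return []
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - med) / mad > threshold]


if __name__ == "__main__":
    temps = [20.1, 20.3, 19.9, 20.0, 20.2, 35.0, 20.1]
    print(robust_outliers(temps))  # [5]
```

A flagged index does not prove an attack; it marks data to quarantine or cross-check before it reaches a model that will act on it.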

3.3 Supply Chain Vulnerabilities

IoT devices are often assembled from components sourced globally. This creates a complex supply chain with multiple layers of vendors, subcontractors, and transporters. Each link presents a potential vulnerability: counterfeit chips, tampered firmware, or hidden backdoors could infiltrate systems undetected. Such compromises have implications not only for private consumers but also for critical infrastructure like power plants, healthcare facilities, and defense networks.

Addressing supply chain vulnerabilities requires rigorous vendor vetting, transparency about component provenance, and third-party audits. Companies must also consider “hardware root of trust” approaches—embedding cryptographic anchors within components to verify their authenticity and integrity.
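The "hardware root of trust" idea is often paired with measured boot: each boot stage hashes the next component into a running register before handing over control, so any swapped or tampered component changes the final value. The sketch below is a simplified software model of a TPM-style "extend" operation, where the new register value is SHA-256(old value || component digest). Real devices perform the first measurement in immutable hardware and report the result through signed attestation, which is not shown here; the component images and function names are hypothetical.

```python
import hashlib
import hmac


def extend(register: bytes, component_image: bytes) -> bytes:
    """Fold one component's digest into the running measurement (extend-style)."""
    digest = hashlib.sha256(component_image).digest()
    return hashlib.sha256(register + digest).digest()


def measure_boot_chain(images: list[bytes]) -> bytes:
    """Measure components in boot order, starting from an all-zero register."""
    register = bytes(32)
    for image in images:
        register = extend(register, image)
    return register


def attest(images: list[bytes], golden: bytes) -> bool:
    """Compare the measured value with a known-good ('golden') value."""
    return hmac.compare_digest(measure_boot_chain(images), golden)


if __name__ == "__main__":
    chain = [b"bootloader-v1", b"kernel-v5", b"app-v2"]
    golden = measure_boot_chain(chain)  # recorded from a trusted reference build
    print(attest(chain, golden))  # True
    print(attest([b"bootloader-v1", b"kernel-TAMPERED", b"app-v2"], golden))  # False
```

Because each step hashes the previous value, the final measurement depends on every component and on their order, so a single golden value can vouch for the whole chain.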

3.4 Nation-State and Industrial Espionage

As IoT networks expand, they become attractive targets not just for criminal organizations but also for nation-states and industrial espionage. State-sponsored actors might aim to disrupt infrastructure, steal intellectual property, or influence geopolitical outcomes. Continuous threat intelligence monitoring, international cyber norms, and intergovernmental cooperation are essential to combat such sophisticated threats.

4. Regulatory Frameworks and Industry Standards

With the rapid proliferation of AI-powered IoT ecosystems, policymakers and industry bodies are working to establish guidelines that safeguard public interests. Regulations must strike a delicate balance between enabling innovation and protecting privacy, security, and fundamental rights.

4.1 Current Legislation and Emerging Frameworks

The European Union’s General Data Protection Regulation (GDPR) sets a global benchmark for data protection and privacy. While it does not specifically target IoT or AI, its principles, including data minimization, consent, and breach notification, apply broadly. The EU has also moved on AI-specific legislation: the EU AI Act, adopted in 2024, classifies AI applications by risk level and imposes obligations accordingly.

Other regions and countries are also developing their own regulatory approaches. The United States has proposed frameworks that encourage voluntary cybersecurity standards, while China and Japan have pursued policies emphasizing data sovereignty and national security. As these regulations evolve, global consensus and interoperability become critical to preventing conflicting mandates that hamper cross-border commerce and innovation.

4.2 Industry Standards and Certification Programs

Industry consortia and standardization bodies, such as the IEEE, ISO, and the Industry IoT Consortium (formerly the Industrial Internet Consortium), play a vital role in shaping best practices. They develop technical guidelines for device security, data formats, and interoperability. Voluntary certification programs can signal that products meet specific ethical and security benchmarks, building consumer confidence.

Adherence to recognized standards helps level the playing field, encouraging competition based on quality rather than cost-cutting at the expense of security. Clear standards also reduce the burden on regulators, as compliance can be more easily verified and enforced.

5. Best Practices for Stakeholders

Addressing the ethical and security challenges in AI-powered IoT ecosystems requires a collective effort. Developers, businesses, policymakers, and consumers must work together to ensure responsible innovation.

5.1 For Businesses and Developers

Security-by-Design:

Integrate security considerations from the earliest stages of product development. Regular code reviews, penetration testing, and vulnerability scans can identify weaknesses before deployment.

Ethical AI Frameworks:

Adopt frameworks and toolkits that help detect and mitigate bias. Conduct frequent audits and collaborate with ethicists, social scientists, and civil society organizations to keep algorithmic bias in check.

User-Centric Privacy Controls:

Provide clear, accessible user interfaces for managing data permissions. Offer granular control, enabling users to selectively share data and opt out easily.
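Granular, opt-in consent can be modeled as a per-user set of approved purposes that every data-sharing path must check before releasing anything. This is a minimal sketch; the `Purpose` values and the `share_data` gate are hypothetical names, and a real system would also persist consent records and log when consent was granted or withdrawn.

```python
from dataclasses import dataclass, field
from enum import Enum
from typing import Optional


class Purpose(Enum):
    """Hypothetical processing purposes a user can toggle independently."""
    DEVICE_OPERATION = "device_operation"
    ANALYTICS = "analytics"
    THIRD_PARTY_SHARING = "third_party_sharing"


@dataclass
class ConsentRecord:
    user_id: str
    # Empty by default: nothing is shared until the user explicitly opts in.
    granted: set[Purpose] = field(default_factory=set)

    def grant(self, purpose: Purpose) -> None:
        self.granted.add(purpose)

    def revoke(self, purpose: Purpose) -> None:
        self.granted.discard(purpose)  # opting out is as easy as opting in

    def allows(self, purpose: Purpose) -> bool:
        return purpose in self.granted


def share_data(record: ConsentRecord, purpose: Purpose, payload: dict) -> Optional[dict]:
    """Release data only for purposes the user has explicitly opted into."""
    return payload if record.allows(purpose) else None


if __name__ == "__main__":
    consent = ConsentRecord("user-42")
    print(share_data(consent, Purpose.ANALYTICS, {"steps": 8432}))  # None
    consent.grant(Purpose.ANALYTICS)
    print(share_data(consent, Purpose.ANALYTICS, {"steps": 8432}))  # {'steps': 8432}
```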

Continuous Improvement and Transparency:

Foster a culture of continuous learning and improvement. Document AI model logic, maintain records of decision-making processes, and communicate changes transparently to users.
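Keeping records of automated decisions can start with an append-only log that captures the model version, inputs, output, and main contributing factors for each decision. The sketch below assumes a JSON Lines file and hypothetical field and function names (`DecisionRecord`, `log_decision`). Appending alone does not make a log tamper-proof, so production systems typically add access controls, retention policies, or cryptographic chaining.

```python
import json
from dataclasses import asdict, dataclass
from datetime import datetime, timezone


@dataclass
class DecisionRecord:
    """One automated decision, with enough context to review it later."""
    model_version: str
    inputs: dict
    output: str
    top_factors: list
    timestamp: str


def log_decision(path: str, model_version: str, inputs: dict,
                 output: str, top_factors: list) -> DecisionRecord:
    """Append a decision as one JSON line; this function never rewrites earlier lines."""
    record = DecisionRecord(
        model_version=model_version,
        inputs=inputs,
        output=output,
        top_factors=top_factors,
        timestamp=datetime.now(timezone.utc).isoformat(),
    )
    with open(path, "a", encoding="utf-8") as f:
        f.write(json.dumps(asdict(record)) + "\n")
    return record


if __name__ == "__main__":
    log_decision("decisions.jsonl", "thermostat-model-1.2",
                 {"indoor_c": 19.0, "occupancy": 1}, "heat", ["indoor_c"])
```

Recording the model version with every decision is what makes later audits meaningful: a reviewer can tie an outcome to the exact model and inputs that produced it.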

5.2 For Governments and Policymakers

Balanced Regulations:

Create flexible, outcome-focused regulations that protect citizens without stifling innovation. Mandate security and privacy requirements while allowing room for technological evolution.

Public-Private Partnerships:

Collaborate with the private sector, academia, and civil society to develop policies informed by real-world expertise. Joint task forces and advisory panels ensure diverse perspectives are considered.

Invest in Education and Training:

Support programs that educate citizens about digital literacy, privacy rights, and cyber hygiene. A well-informed population is better equipped to make responsible choices in a connected world.

International Cooperation:

Promote harmonized standards and global cooperation on cybersecurity, data protection, and AI ethics. International treaties and alliances can deter nation-state attacks and foster trust.

5.3 For Consumers

Informed Decision-Making:

Research devices before purchasing. Read privacy policies, check for reputable brands that adhere to recognized standards, and stay informed about security updates.

Cyber Hygiene:

Change default passwords, enable two-factor authentication, and update firmware regularly. Simple steps can greatly reduce vulnerability to common attacks.

Advocacy and Feedback:

Consumers can drive change by voicing concerns, supporting responsible brands, and calling for better regulations. Collective demand for ethical, secure products incentivizes industry improvements.

6. Balancing Innovation with Responsibility

The future of AI-powered IoT ecosystems offers immense potential: autonomous transportation could reduce accidents and pollution, wearable health sensors could enable early disease detection, and precision agriculture could strengthen global food security. To realize these benefits sustainably, ethical and security considerations must remain at the forefront.

Open Research and Collaboration:

Open-source platforms and research collaborations can facilitate the sharing of best practices, tools, and threat intelligence. Transparency in AI and IoT development encourages creativity and helps prevent isolated pockets of risk.

Future-Proofing Technologies:

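As quantum computing matures, a sufficiently large quantum computer could break widely used public-key algorithms such as RSA and elliptic-curve cryptography. Migrating to quantum-resistant (post-quantum) cryptography now, for which NIST published its first standards in 2024, helps protect long-lived data against adversaries who record encrypted traffic today in order to decrypt it later. Similarly, homomorphic encryption allows computation on encrypted data, and differential privacy limits what published analytics reveal about any individual, so both can protect data while still allowing analysis.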
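Differential privacy is often introduced through the Laplace mechanism: add noise drawn from a Laplace distribution with scale equal to sensitivity divided by epsilon to a query result. The sketch below applies it to a simple count (sensitivity 1), such as how many homes in a hypothetical neighborhood had a device active. It is for illustration only; naive floating-point noise sampling has known weaknesses, so production deployments generally rely on vetted differential privacy libraries.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) as the difference of two i.i.d. exponentials."""
    return rng.expovariate(1 / scale) - rng.expovariate(1 / scale)


def dp_count(records: list[bool], epsilon: float, rng: random.Random) -> float:
    """Release a count with epsilon-differential privacy via the Laplace mechanism.

    Adding or removing one person changes a count by at most 1 (sensitivity 1),
    so Laplace noise with scale 1/epsilon suffices.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(records)
    return true_count + laplace_noise(1.0 / epsilon, rng)


if __name__ == "__main__":
    rng = random.Random(7)
    homes_active = [True] * 137 + [False] * 863  # hypothetical neighborhood data
    print(round(dp_count(homes_active, epsilon=0.5, rng=rng), 1))
```

Smaller epsilon means more noise and stronger privacy; each additional released statistic consumes part of the overall privacy budget.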

Consumer and Stakeholder Engagement:

An engaged citizenry and informed stakeholders act as checks and balances. Businesses that genuinely listen to user feedback and comply with regulations can differentiate themselves by offering ethical, reliable products. Policymakers who consult with industry experts and civil society groups create more relevant, effective legislation.

7. Conclusion

The integration of AI and IoT has ushered in a new era of possibility, linking the digital and physical realms in unprecedented ways. This transformation, while promising, raises profound ethical and security questions. Navigating these challenges is not a straightforward task—it requires thoughtful design, continuous adaptation, and cooperation among all stakeholders.

Data privacy, algorithmic fairness, transparency, and labor market dynamics must be considered as ethical pillars, guiding the responsible development of AI-powered IoT ecosystems. Concurrently, robust security measures—encompassing device-level protections, secure supply chains, and resilient cybersecurity infrastructures—are essential to safeguarding the public interest.

Policymakers, industry leaders, developers, and consumers all have pivotal roles to play. By working together to establish balanced regulations, adopt best practices, and invest in education and outreach, we can strike a delicate equilibrium. Such an approach ensures that AI-powered IoT ecosystems remain tools of empowerment rather than instruments of harm.

As we move forward, the ultimate goal is to foster environments where innovation thrives responsibly. By embracing ethical principles, robust security, and global collaboration, we can shape a future in which AI-powered IoT ecosystems deliver on their immense promise—enhancing quality of life, driving economic growth, and upholding the trust and well-being of all.