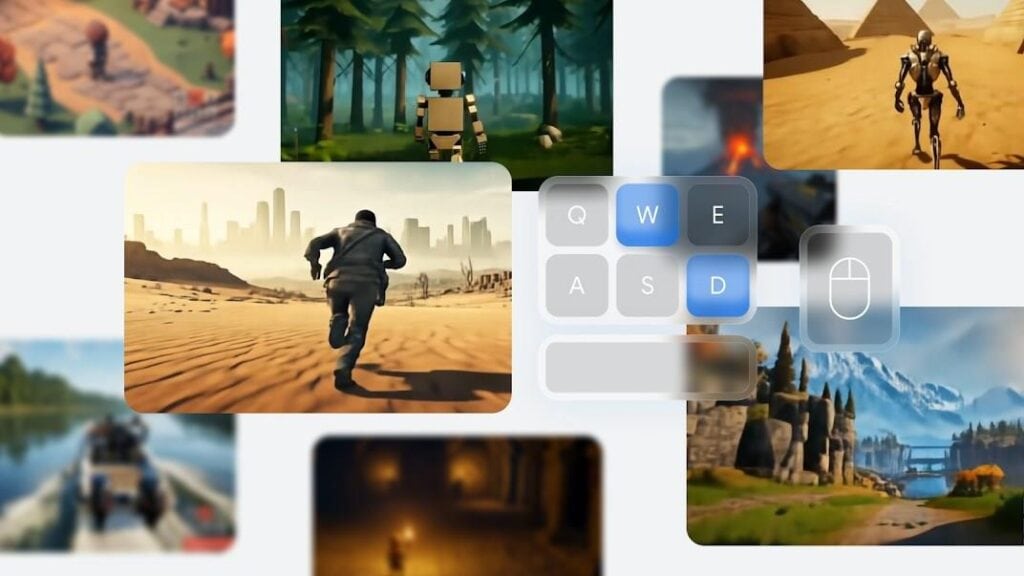

Earlier this year, Google DeepMind announced Genie, an artificial intelligence tool that can create playable 2D games from real images or from images generated from a text prompt. The company has now announced Genie 2, an improved version of the tool that can produce playable 3D games.

It Can Model Many Real-World Effects in 3D Games

DeepMind’s Genie 2 is not a game engine but a diffusion model that generates images as the player (a human or another AI agent) moves through the world the software simulates. As it generates frames, Genie 2 can infer details about the environment, which lets it model effects such as water, smoke, lighting and physics. The model can render scenes from first-person, third-person or isometric perspectives.

Genie 2 can remember parts of a simulated scene even after they have left the player’s field of view, and accurately recreate them when they reappear.

However, Genie 2 does have some limitations. According to DeepMind, the model can produce consistent scenes for up to 60 seconds, but the published example videos are much shorter, at around 10 to 20 seconds. In addition, the longer Genie 2 has to maintain the illusion of a consistent world, the more distortions appear and the more image quality degrades. DeepMind has not revealed details of how Genie 2 was trained, other than to say that it used a large video dataset.

Genie 2 is still in the experimental phase and is unlikely to be released next year, but it has the potential to revolutionise the gaming world in the future. For now, the company sees the model as a tool for training and evaluating other AI agents, including its own SIMA agent. It also sees Genie 2 as a way for artists and designers to quickly prototype and experiment with their ideas. DeepMind expects world models such as Genie 2 to play an important role in the development of artificial general intelligence in the coming years.